There are still a few seats left in this weekend's Making Interactive Prototypes workshop, hosted by Mode Lab and LIFT architects in New York City. Interactive Prototyping is a process that strives to empower designers by giving them the ability to build and prototype ideas in a more fluid and cost-effective manner. To find out more, visit: http://lab.modecollective.nu/lab/making-interactive-prototypes-w-an...

About this Workshop:

The design process undoubtedly encompasses many things. From ideation, 3D modeling, programming, and manufacturing to material testing, marketing, life cycle assessment, and cost analysis, the designer is often confronted with many challenges. Prototypes give the designer the ability to test or simulate how a given set of parameters will affect a particular design. Prototyping is inherently iterative, and we're constantly searching for faster, more powerful ways to build better prototypes. Interactive Prototyping is a process that strives to empower designers by giving them the ability to build and prototype ideas in a more fluid and cost-effective manner.

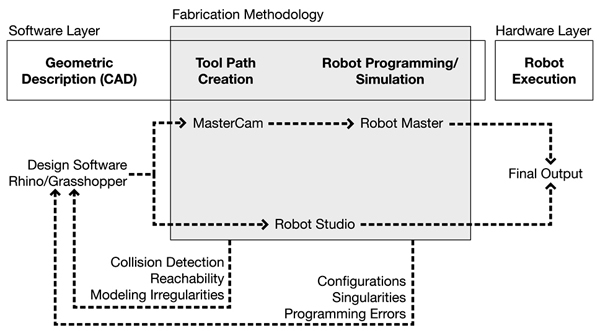

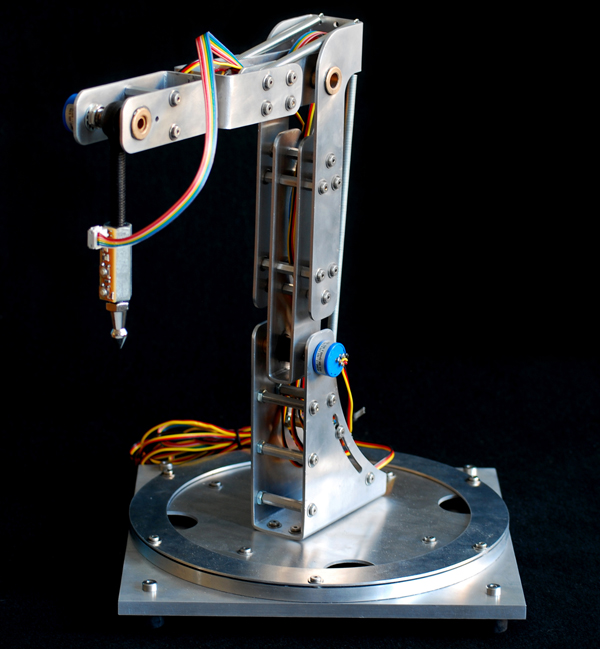

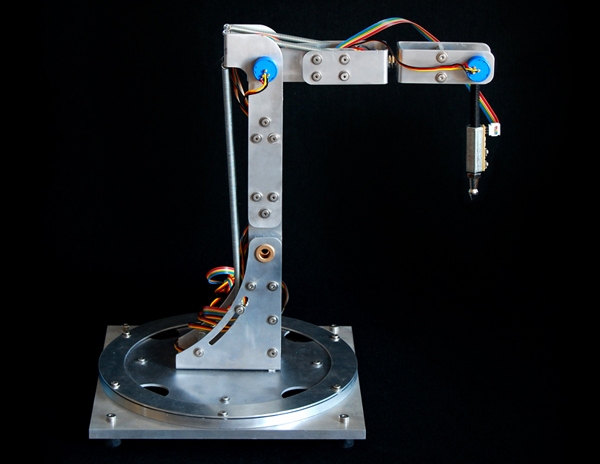

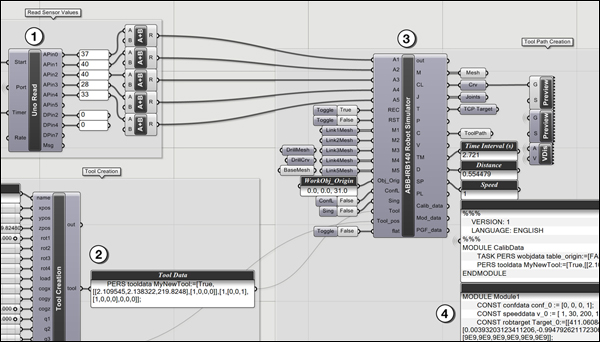

This workshop will focus on hardware and software prototyping techniques, covering a wide range of sensing and actuation modalities and the creation of custom-built tools for making novel interactive prototypes. Using remote sensors, microcontrollers (Arduino), and actuators, we will build virtual and physical prototypes that can communicate with humans and the world around them. There will be particular emphasis on prototyping, both digital and physical, as a means to explore intelligent control strategies, material affects, and the parameters that affect dynamic systems. This fast-paced two-day workshop will introduce a number of topics relevant to prototyping, including physical computing and electronics, Arduino essentials, circuit design with Fritzing, interactive prototyping with Firefly, computer vision, soldering, and much more.